Our motivation at Promyze was to get an overview of "what's a typical public PR on GitHub?". The platform is used today by a wide range of organizations: single developers working on side projects, private companies, open-source organizations, universities, and so on. Our purpose is not to highlight data on PR management in private companies, with teams working 40 hours a week together, but instead to provide a humble overview of the public data available on GitHub.

Dataset construction

We used the GitHub REST API to browse public organizations. Then, within each of them, we searched for a pull request that matched the following criteria:

- PRs must be closed and merged. PRs that were closed without being merged may have been abandoned for lack of interest or because their authors left the project. They are not necessarily of interest to us, so we skipped them.

- PRs were opened after the beginning of 2019. As we consider metrics such as stars and contributors, we wanted some recent data.

- We skipped forked repositories, as they could reduce the diversity of the collected data.

- PRs contain at least one source code file.

We ran queries on the REST API for a few days, and finally gathered 10,193 pull requests from distinct organizations.
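The selection criteria above can be expressed as a small filter. This is only a minimal sketch, not the actual script used for this study: it assumes each PR is a dictionary carrying the `created_at` and `merged_at` timestamps found in GitHub REST API pull request objects, while `keep_pr`, `repo_is_fork`, `filenames`, and the `SOURCE_EXTENSIONS` set are hypothetical names and values chosen for illustration.

```python
from datetime import datetime, timezone

# Assumption: an illustrative, non-exhaustive set of source code extensions.
SOURCE_EXTENSIONS = (".py", ".js", ".ts", ".java", ".go", ".rb", ".c", ".cpp", ".rs")

# PRs must have been opened after the beginning of 2019.
CUTOFF = datetime(2019, 1, 1, tzinfo=timezone.utc)


def parse_github_time(value: str) -> datetime:
    """Parse a timestamp formatted like '2019-03-01T12:00:00Z'."""
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def keep_pr(pr: dict, repo_is_fork: bool, filenames: list) -> bool:
    """Return True if the PR matches the four selection criteria."""
    if repo_is_fork:
        return False  # fork repositories are skipped
    if pr.get("merged_at") is None:
        return False  # closed without being merged
    if parse_github_time(pr["created_at"]) < CUTOFF:
        return False  # opened before 2019
    # At least one changed file must look like source code.
    return any(name.lower().endswith(SOURCE_EXTENSIONS) for name in filenames)
```

For example, a merged PR opened in March 2019 that only touches `README.md` would be rejected by the last check, since `.md` is not in the assumed extension set.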

🗨️ Size of the PR

To evaluate the size of the PR, we computed the following metrics:

- commits: The number of commits

- files: The number of files that underwent changes

- lines_added: The number of lines added (marked as '+' in the diff)

- lines_removed: The number of lines of code removed (marked as '-' in the diff)

- chunks: The number of chunks of code, a chunk being a block of removed and/or added lines.
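To make the last three metrics concrete, here is a hedged sketch of how they could be counted for a single file. It assumes the input is a per-file patch made of hunks only (starting with `@@`, without the `+++`/`---` file headers), and it treats every uninterrupted run of `+`/`-` lines as one chunk. The function name `diff_stats` is hypothetical.

```python
def diff_stats(patch: str) -> dict:
    """Count added lines, removed lines, and chunks in one file's patch."""
    added = removed = chunks = 0
    in_chunk = False  # True while we are inside a run of '+'/'-' lines
    for line in patch.splitlines():
        if line.startswith("+"):
            added += 1
        elif line.startswith("-"):
            removed += 1
        else:
            # Context lines and '@@' hunk headers end the current chunk.
            in_chunk = False
            continue
        if not in_chunk:
            chunks += 1
            in_chunk = True
    return {"lines_added": added, "lines_removed": removed, "chunks": chunks}


example = "\n".join([
    "@@ -1,4 +1,5 @@",
    " unchanged",
    "-old line",
    "+new line",
    "+another new line",
    " unchanged",
    "+trailing addition",
])
stats = diff_stats(example)  # 3 lines added, 1 removed, 2 chunks
```

Summing these per-file values over all the files of a PR gives the PR-level metrics.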

And here are the extracted values:

| Metric | Average | 25% | Median | 75% |
|---|---|---|---|---|
| commits | 3.26 | 1 | 1 | 3 |
| files | 35.8 | 1 | 3 | 10 |
| lines_added | 3,144 | 3 | 21 | 122 |
| lines_removed | 1,781 | 1 | 4 | 260 |
| chunks | 60 | 1 | 4 | 16 |

We can observe that at least 75% of the PRs have a reasonable size for a code review, since the state of the art recommends reviewing no more than 500 lines of code. But, spoiler: not all of these PRs will necessarily be reviewed!

The average values are quite large because some outliers have extremely high values.
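The effect of outliers on the average is easy to reproduce. The sketch below uses made-up `lines_added` values (not taken from our dataset) and Python's standard `statistics` module to compare the average with the quartiles.

```python
import statistics

# Hypothetical values: mostly small PRs plus one huge outlier.
lines_added = [1, 3, 5, 8, 21, 40, 122, 300, 25000]

mean = statistics.mean(lines_added)
# quantiles(n=4) returns the three cut points: 25%, median, 75%.
q1, median, q3 = statistics.quantiles(lines_added, n=4)

print(f"average={mean:.1f} 25%={q1} median={median} 75%={q3}")
```

A single very large PR is enough to push the average far above the 75% value, which is why the median and quartiles describe a "typical" PR better.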

👥 Participants in Comments

Developers write comments on a PR to start discussions on its content, ask for clarifications or suggest improvements. We wanted to highlight data regarding those discussions.

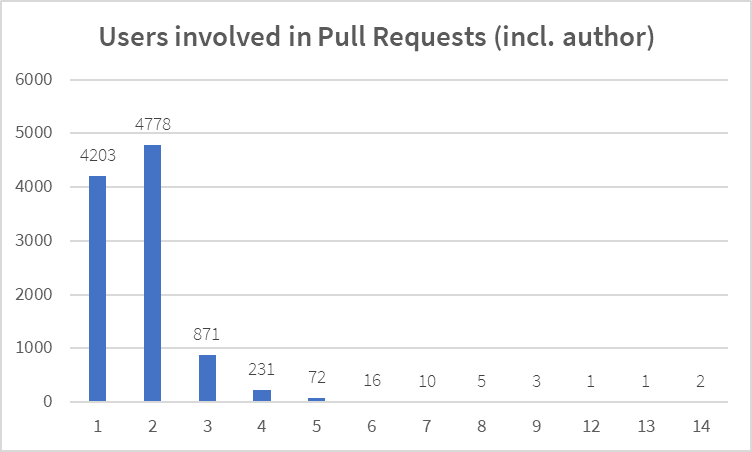

First of all, how many users participate in the PR discussion (including the author of the PR and Bots, as we will discuss below)? Here are the key insights:

- 58.8% of the PRs (5,990) involve at least two people, not only the author of the PR;

- 46.9% of the PRs involve exactly two people, which is thus the most common pattern observed in our data;

- 31.2% of the PRs involve only the author, which shows that pull requests are not always collaborative workspaces.

Comments can also be automatically generated by Bots, which are applications that GitHub users can set up on their projects to perform quality or security analysis for instance.

We found that 748 PRs (7.33% of our dataset) have at least one comment from a Bot. In this sample, only 50 PRs involved more than one Bot (45 have 2 Bots and 5 have 3 Bots).
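As an illustration, participants and Bots could be counted as follows. This is a sketch under assumptions: it supposes that each comment carries a `user` object with a `login` and a `type`, and that the `type` is `"Bot"` for applications, as in GitHub REST API user objects. The function name `count_participants` is hypothetical.

```python
def count_participants(author: dict, comments: list) -> tuple:
    """Return (distinct participants, distinct Bots) for one PR."""
    # Map each login to its account type; the author always participates.
    users = {author["login"]: author.get("type")}
    for comment in comments:
        user = comment["user"]
        users[user["login"]] = user.get("type")
    bots = sum(1 for kind in users.values() if kind == "Bot")
    return len(users), bots


author = {"login": "alice", "type": "User"}
comments = [
    {"user": {"login": "bob", "type": "User"}},
    {"user": {"login": "quality-check[bot]", "type": "Bot"}},
    {"user": {"login": "bob", "type": "User"}},  # same user, counted once
]
result = count_participants(author, comments)  # (3, 1)
```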

📏 Size of the discussions

What about the number of comments in the PR?

Here are the main insights:

- 7,202 PRs (70.65%) do not have any comments;

- 1,356 PRs have a single comment;

- 1,402 PRs have between 2 and 10 comments;

- 233 PRs (2.3%) have more than 10 comments.

In most cases, discussions (when they exist) are quite short and seldom go beyond 10 comments.

✅ Approvals

We wanted to take a closer look at the approval feature offered by GitHub.

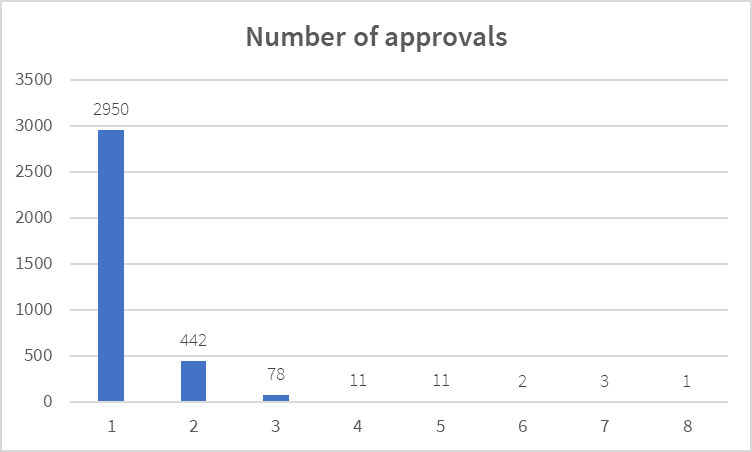

We found that 34% of the PRs have at least one approval.

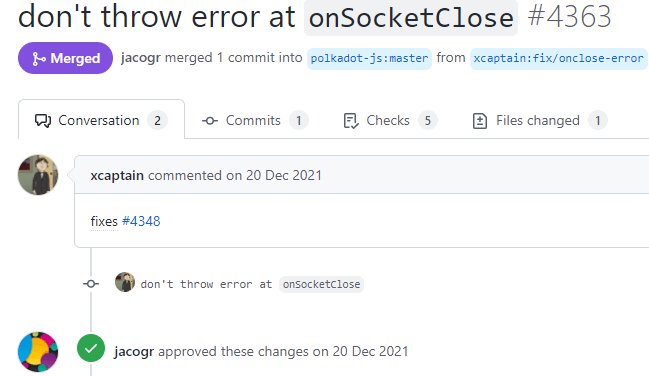

In this example, the user "jacogr" approved the PR.

As you may know, a PR can require approval from multiple users. For the PRs with at least one approval, which represent 3,498 PRs, here is the distribution of the number of approvals.

As you can see, 84.33% of the approved PRs were approved by a single developer. 0.8% of the PRs have at least 3 approvers.
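The number of approvals on a PR can be derived from its reviews. The sketch below assumes review objects shaped like those of the GitHub REST API, with a `state` equal to `"APPROVED"` for approvals and a `user.login` field. The helper `count_approvers` is hypothetical, and it deliberately ignores subtleties such as an approval followed by a later "changes requested" review from the same user.

```python
def count_approvers(reviews: list) -> int:
    """Count the distinct users who approved a PR."""
    approvers = {
        review["user"]["login"]
        for review in reviews
        if review.get("state") == "APPROVED"
    }
    return len(approvers)


reviews = [
    {"user": {"login": "bob"}, "state": "COMMENTED"},
    {"user": {"login": "bob"}, "state": "APPROVED"},
    {"user": {"login": "carol"}, "state": "APPROVED"},
    {"user": {"login": "carol"}, "state": "APPROVED"},  # counted once
]
approvals = count_approvers(reviews)  # 2
```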

🔎 PR Lifetime and Wait Time Per Line of code

We compute the PR lifetime as the duration between its creation and the moment it was merged and closed.

We found that 2,861 PRs (28.07%) have a zero-minute lifetime, meaning they were either merged automatically or merged manually right after being opened. Only 238 PRs of this sample involve more than one user. The other 2,623 involve only their author, meaning that about 1/4 of the PRs in our dataset are opened just to merge code from one branch to another, without any validation process.

For the rest of the PRs, having a lifetime > 0 minutes, here are the insights:

- 3,885 PRs have a lifetime ≤ 6 hours

- 1,097 PRs have a lifetime between 6 hours and a day

- 1,014 PRs have a lifetime between 1 day and 7 days

- 520 PRs have a lifetime between 7 days and 30 days

- 306 PRs have a lifetime >30 days (115 more than 110 days!)

Even though a majority of the PRs are merged within 6 hours, 18.05% of them still wait more than a day to be merged.
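The lifetime and the buckets above can be sketched in a few lines. Two assumptions are made here: the lifetime is truncated to whole minutes (so a PR merged in under 60 seconds counts as "zero-minute"), and each upper bound is inclusive. The function names and bucket labels are hypothetical.

```python
from datetime import datetime

TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # e.g. '2020-01-01T10:00:00Z'


def lifetime_minutes(created_at: str, merged_at: str) -> int:
    """Whole minutes between the creation of a PR and its merge."""
    created = datetime.strptime(created_at, TIME_FORMAT)
    merged = datetime.strptime(merged_at, TIME_FORMAT)
    return int((merged - created).total_seconds() // 60)


def lifetime_bucket(minutes: int) -> str:
    """Map a lifetime in minutes to one of the buckets used above."""
    if minutes == 0:
        return "zero-minute"
    if minutes <= 6 * 60:
        return "<= 6 hours"
    if minutes <= 24 * 60:
        return "6 hours to 1 day"
    if minutes <= 7 * 24 * 60:
        return "1 to 7 days"
    if minutes <= 30 * 24 * 60:
        return "7 to 30 days"
    return "> 30 days"


minutes = lifetime_minutes("2020-01-01T10:00:00Z", "2020-01-03T10:00:00Z")
bucket = lifetime_bucket(minutes)  # two days, so "1 to 7 days"
```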

⏱️ Wait Time Per Line of code

Consider now the wait time per line of code metric, which reveals how long a line of code waits to be merged. The lower this value, the lower the latency. A value of 0 means a line of code is merged instantly. For instance, a PR with 10 new lines merged in 10 minutes has a higher throughput than a PR with 2 new lines merged in the same timeframe.
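In code, the metric boils down to a division. The sketch below assumes that the denominator is the number of lines added, as in the example above; whether removed lines should also count is not specified here, so treat that choice as an assumption. The function name is hypothetical.

```python
def wait_time_per_line(lifetime_minutes: float, lines_added: int) -> float:
    """Minutes a line of code waits, on average, before being merged."""
    if lines_added <= 0:
        raise ValueError("lines_added must be positive")
    return lifetime_minutes / lines_added


big_pr = wait_time_per_line(10, 10)   # 10 lines in 10 minutes -> 1.0
small_pr = wait_time_per_line(10, 2)  # 2 lines in 10 minutes -> 5.0
```

The PR with 10 lines gets the lower value (1 minute per line versus 5), which matches the idea that it has the higher throughput.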

For readability reasons, we decided to skip "zero-minute PRs".

Here is the distribution, in minutes:

| Decile | 10% | 20% | 30% | 40% | Median | 60% | 70% | 80% | 90% |
|---|---|---|---|---|---|---|---|---|---|
| Wait time per line of code | 0.07 | 0.39 | 1.21 | 3.71 | 10.5 | 28 | 83.84 | 273.5 | 1362 |

This is highly heterogeneous depending on the projects. The median value indicates that, for half of the PRs, a line of code waits at most 10.5 minutes before being merged.

🔎 Focus on a sample of projects

As we already said, projects on GitHub are heterogeneous: personal projects, student projects, open-source projects maintained by private companies, and so on. We'd now like to narrow the focus to PRs from projects where we assume some structural rules have been set due to their size:

- At least 10 contributors

- At least 50 stars

We're aware these are arbitrary values, but they seem quite fair to us. This sample represents 1,994 PRs (19.5% of the original dataset); we'll call it "Category A".

Let's compare the main metrics with another population: projects having both fewer than 10 contributors and fewer than 50 stars. It represents 6,560 PRs (64.3% of our dataset); we'll call it "Category B".
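The two populations can be expressed as a simple rule. In this sketch (the function name `categorize` is hypothetical), note that projects meeting only one of the two thresholds fall into neither category, which is why Category A and Category B together do not cover the whole dataset.

```python
from typing import Optional


def categorize(contributors: int, stars: int) -> Optional[str]:
    """Return 'A', 'B', or None for projects in neither category."""
    if contributors >= 10 and stars >= 50:
        return "A"
    if contributors < 10 and stars < 50:
        return "B"
    return None  # e.g. many contributors but few stars


examples = [categorize(25, 400), categorize(2, 3), categorize(12, 8)]
# -> ['A', 'B', None]
```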

| Metric | Category A (average) | Category A (median) | Category B (average) | Category B (median) |
|---|---|---|---|---|
| files | 19.78 | 3 | 39.48 | 3 |
| chunks | 42.9 | 4 | 58.05 | 5 |
| commits | 3.41 | 1 | 2.94 | 1 |
| lines_added | 318.83 | 11 | 4565.91 | 28 |
| lines_removed | 354.82 | 4 | 2479.48 | 4 |
| comments | 2.94 | 1 | 0.61 | 0 |
| bots | 0.13 | 0 | 0.05 | 0 |
| pr_lifetime_in_hours | 222 | 16 | 75.97 | 0 |

If we look at the other remaining metrics:

| Metric | Category A | Category B |
|---|---|---|
| approved PR | 1,100 (55.2%) | 1,614 (24.6%) |
| zero-minute PR | 72 (3.6%) | 2,624 (40%) |

Not so surprisingly, we can observe that comments and approvals are more frequent in the Category A projects. The share of approved PRs is more than twice as high in this population (55.2% vs 24.6%), and it even exceeds half of the sample. We also observe that zero-minute PRs are a small minority in that sample, suggesting that these projects tend to set up a validation process for their PRs.

We observe that the content of PRs in Category A is more atomic than in Category B. The median number of lines added is 11 vs 28, and the differences are much larger on average in terms of lines added, lines removed, and files involved.

🚀 Next steps

That's all folks, feel free to comment if you have any suggestions or questions regarding this dataset.

Want to go deeper on Pull Requests? Find out how to save time during your code reviews on GitHub.
